As Ruby/Rails developers, we love writing tests. Testing is an integral part of software development, and good tests help us write high-quality code. They add value to the overall development process, but if we do not manage them well, they can slow us down. Here are some symptoms of poorly managed tests:

- Running tests takes a long time.

- The tests are unreliable and fail randomly.

- The tests behave differently on different machines.

- Running all the test suites slows down your CI.

In this blog post, we will cover how containers can be used to make testing reliable, which is helpful in delivering high-quality software.

Integration Tests and Unit Tests

Before we jump into containers, let us make sure we agree on the difference between unit tests and integration tests.

Unit tests are a way to test a block of code in isolation. They help test the functionality of the block of code but not the dependencies. For example, here is a simple method:

def sum(a, b)
  a + b
end

This function sums two numbers, so testing it is easy. But what if the function depends on an external service? Suppose the method also has to write the result to the database once it computes the sum:

def sum!(a, b)
  c = a + b
  Sum.create(value: c)
end

In this block of code, the values are added and the result is saved to a database. To test this code, we need a running database server, and once the test uses it, it is no longer a unit test: it relies on an external dependency, and it also tests the database connection and whether the record is saved. In this case, the test is an integration test.

Unit tests can be solitary unit tests (which mock or stub all dependencies) or sociable unit tests (which are allowed to talk to their dependencies). People have proposed various definitions of unit tests; in this post, by "unit test" we mean a solitary unit test.

Removing external dependencies with mocks helps the tests run faster. Unit tests leave these dependencies out, but to make sure the application works as expected, testing the dependencies is equally important. The tests that we write to exercise external dependencies, such as databases, network calls, and files, are integration tests.
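To make the distinction concrete, here is a minimal plain-Ruby sketch of a solitary unit test for the `sum!` idea above. It assumes the persistence dependency is injected rather than referenced as the `Sum` constant; `SumService` and `FakeSumRepository` are hypothetical names, and unlike `sum!`, this version returns the computed value rather than the saved record.

```ruby
# Hypothetical service object: the repository (in the app, something like
# the Sum model) is passed in, so a test can replace it with a fake.
class SumService
  def initialize(repository)
    @repository = repository
  end

  def sum!(a, b)
    c = a + b
    @repository.create(value: c) # same call shape as Sum.create(value: c)
    c
  end
end

# In-memory test double: records values instead of writing to a database.
class FakeSumRepository
  attr_reader :saved

  def initialize
    @saved = []
  end

  def create(value:)
    @saved << value
  end
end

# A solitary unit test: no database server is needed.
repo = FakeSumRepository.new
result = SumService.new(repo).sum!(1, 2)
raise "expected 3, got #{result}" unless result == 3
raise "expected [3] to be saved, got #{repo.saved}" unless repo.saved == [3]
puts "solitary sum! test passed"
```

Running the same test against the real `Sum` model would need a Postgres server, which is exactly what turns it into an integration test.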

As with unit tests, people have proposed various definitions of integration tests, but the basic concept is the same; the difference is only in scope. A test of a small function that writes to a database is an integration test, and so is a test of broader functionality that connects multiple parts of the system. In this post, we focus on the narrow kind. The narrower the tests, the more of them we need to write; broader tests require fewer tests.

While running an integration test, we need the application and dependencies, such as the database and external services, to be running. Managing these dependencies can be challenging.

Fundamentals of Docker

Docker is a technology that separates an application from its environment (the operating system) and runs it in isolation from the host. This is similar to a virtual machine, but it is faster and more efficient. Docker lets us package our application code and its dependencies into a single image, which makes the code portable: the same Docker image can run during development, testing, and production. This keeps the environment in which the application runs consistent, and because the container does not depend on what is installed on the host, the application behaves predictably in all environments. Docker also lets developers build, run, deploy, and update the application and its environment using simple commands.

Let us go through some important terminology:

Dockerfile - A Dockerfile is a text file where we define how to build the image for the application: the operating system, database client libraries, and any other packages the application needs, as well as the command that starts the application. A Dockerfile starts from a base image, which could be an operating system (such as Debian) or a language runtime (such as ruby:2.7).

Images - An image is a read-only template from which containers are created. Images are built in layers: each Dockerfile instruction that changes the filesystem (such as RUN, COPY, or ADD) adds a layer to the image. Layers can be reused, and Docker caches intermediate layers so that unchanged steps do not have to be rebuilt, which makes image builds faster.

Containers - A container is a running instance of an image; it can also be in a stopped state. A single Docker image can be used to start many containers. Each container adds a thin writable layer on top of the image, where all changes made while the application runs are stored.

Docker Compose - Docker Compose is a separate tool for managing multiple containers. We may need several containers from the same Docker image, or containers from different images that need to interact with each other; Docker Compose starts these containers and connects them on a shared network. Other tools are available, but in this post we will only use Docker Compose because of its simplicity.

Virtual machines run on a hypervisor, and each one needs its own guest operating system, so they use more host resources. Docker containers, in contrast, share the host's kernel: we only need to install the Docker Engine on the host operating system, and Docker manages the host resources each container uses. Docker makes life easier for both developers and sysops, which is why it is so popular in DevOps culture.

Why Use Docker for Testing

Docker isolates the running application or the test from its host environment with minimal resource overhead. Here are some of the advantages of using Docker for testing:

Lightweight: Docker containers are lightweight, so we can run several of them at the same time without one test process causing issues in another.

Portable: Docker images are portable and can run on any machine with a compatible Docker engine. This helps in CI, because we can use the same image to run tests both locally and in CI.

Versioning: Working on multiple applications, or upgrading dependencies, often means needing several versions of the same dependency on our local machine, which is very difficult to manage without Docker. With Docker, each version can run in its own container, so we can also run the tests against different versions and verify the behavior.

Easy management of microservices: With microservices, testing also becomes distributed and complicated, because we need to manage the dependent microservices as well. Docker and Docker Compose help manage these service dependencies.

Pre-requisites

To try this out, let's make sure the following are set up:

- Docker

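- Docker Compose

- Ruby 2.7 (optional, as we will add Ruby to the Docker container, but helpful for running commands such as rails new locally)

- Rails 6.0+ (the db:system:change command and the built-in parallel testing used below were introduced in Rails 6)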

Dockerize the App

Let us start by creating a simple Rails app and containerizing it. I am starting with a fresh Rails app, but you can also containerize an existing one.

rails new calculator

Create a file named Dockerfile with the following content:

FROM ruby:2.7
RUN curl -sS https://dl.yarnpkg.com/debian/pubkey.gpg | apt-key add -
RUN echo "deb https://dl.yarnpkg.com/debian/ stable main" | tee /etc/apt/sources.list.d/yarn.list
RUN apt-get update -qq && apt-get install -y nodejs yarn
RUN mkdir /calculator
WORKDIR /calculator
COPY Gemfile /calculator/Gemfile
COPY Gemfile.lock /calculator/Gemfile.lock
RUN bundle install
COPY package.json /calculator/package.json
COPY yarn.lock /calculator/yarn.lock
RUN yarn install --check-files
COPY . /calculator
EXPOSE 3000
CMD ["rails", "server", "-b", "0.0.0.0"]

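The Dockerfile starts from the ruby:2.7 base image. The default Rails 6 setup uses Webpacker, which needs Node.js and Yarn, so the next step installs them. We then copy only Gemfile, Gemfile.lock, package.json, and yarn.lock and run bundle install and yarn install before copying the rest of the code, so Docker can reuse the cached dependency layers when only application code changes. Finally, we copy all the code, expose port 3000, and start the Rails server bound to 0.0.0.0 so it is reachable from outside the container. This is a very simple Dockerfile, but with it, our Rails app is dockerized.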

Now we can build the Docker image and run a container from it to make sure the app starts.

Build the docker image:

docker build . -t calculator

Run the container:

docker run -p 3000:3000 calculator

Create a Model with Calculation Logic

Let us create a model called Calculation to hold our calculation logic. We will write some logic and tests for this model and then look at how to manage the tests' dependencies using Docker Compose.

The app currently uses SQLite. Let us switch it to Postgres, which can be done with a single command:

rails db:system:change --to=postgresql

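This command replaces the sqlite3 gem with pg in the Gemfile and updates config/database.yml, so rebuild the image afterwards (docker build . -t calculator). Then generate a Calculation model: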

bundle exec rails generate model calculation

Add some columns to the generated migration:

# db/migrate/<timestamp>_create_calculations.rb
class CreateCalculations < ActiveRecord::Migration[6.0]
  def change
    create_table :calculations do |t|
      t.integer :x
      t.integer :y
      t.integer :result
      t.timestamps
    end
  end
end

Next, let's try running the migration inside the container:

docker run --rm calculator rails db:migrate

Since no database server is running inside the container, this command fails with an error:

rake aborted!
PG::ConnectionBad: could not connect to server: No such file or directory
    Is the server running locally and accepting
    connections on Unix domain socket "/var/run/postgresql/.s.PGSQL.5432"?

We can run Postgres in a separate container from the official postgres image and connect the two containers over a network. Docker Compose makes this container communication easy to manage.

Using Docker Compose to Manage Multiple Containers

With the Rails app containerized, we can add its dependencies using Docker Compose. So far, the application's only dependency is Postgres.

The Postgres Docker image is publicly available, so we don't need to build one; we only need to manage the database container alongside our Rails app container. Create a docker-compose.yml file in the root of the project:

version: "3.0"
services:
  db:
    image: postgres
    environment:
      POSTGRES_USER: calculator
      POSTGRES_PASSWORD: password
      POSTGRES_DB: calculator_test
  web:
    build: .
    ports:
      - "3000:3000"
    depends_on:
      - db
    environment:
      CALCULATOR_DATABASE_PASSWORD: password

Next, open config/database.yml and point Rails at the database container. The host is the service name from the Docker Compose file, which in our case is db. Make sure the password is the same in the db service (POSTGRES_PASSWORD) and the web service (CALCULATOR_DATABASE_PASSWORD), and set the username, password, and database name to the values from the Docker Compose file.

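# config/database.yml
default: &default
  # ...keep the existing adapter, encoding, and pool settings...
  host: db
  username: calculator
  password: <%= ENV['CALCULATOR_DATABASE_PASSWORD'] %>

development:
  <<: *default
  database: calculator_development

test:
  <<: *default
  database: calculator_test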

Now, we can start the containers by using a single command:

docker-compose up

This starts both the database and our Rails server. In another terminal, we can now create the development database and run the migration; this time, Rails can connect to Postgres:

docker-compose exec web rails db:create db:migrate
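One caveat: depends_on only controls the order in which Compose starts containers; it does not wait until Postgres is ready to accept connections, so a command issued immediately after docker-compose up can still fail. A minimal readiness-check sketch, assuming the database is reachable as db on the default Postgres port 5432 (`wait_for_port` is a hypothetical helper, not part of Rails):

```ruby
require "socket"

# Polls host:port until a TCP connection succeeds or `timeout` seconds pass.
# Returns true if the port accepted a connection, false otherwise.
# Assumption: in this post's setup the database is reachable as "db" on 5432.
def wait_for_port(host, port, timeout: 30, interval: 0.5)
  deadline = Process.clock_gettime(Process::CLOCK_MONOTONIC) + timeout
  loop do
    begin
      # The block form closes the socket; returning from it exits the method.
      Socket.tcp(host, port, connect_timeout: interval) { return true }
    rescue SystemCallError, SocketError, IOError
      # Refused, timed out, or the hostname does not resolve yet; retry.
    end
    return false if Process.clock_gettime(Process::CLOCK_MONOTONIC) >= deadline
    sleep interval
  end
end
```

A script could call `wait_for_port("db", 5432)` before running `rails db:migrate`. An open port is only an approximation of readiness; the official Postgres image also ships `pg_isready`, which asks the server whether it is accepting connections.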

Adding Tests

Now that we have all the setup ready, let us write a simple method inside the model and test the method:

# app/models/calculation.rb
class Calculation < ApplicationRecord
  def self.sum(x, y)
    result = x + y
    Calculation.create(x: x, y: y, result: result)
    result
  end
end

This sum method not only adds the numbers but also saves them to the database, so testing it requires a running database instance.

This test will be an integration test, as we must connect to a database.

We will use Minitest, the Rails default, to write the test.

# test/models/calculation_test.rb
require 'test_helper'

class CalculationTest < ActiveSupport::TestCase
  test "should sum and save the data" do
    result = Calculation.sum(1, 2)
    c = Calculation.last

    # assert_equal compares values; assert(x, msg) would only check truthiness
    assert_equal 3, result
    assert_equal result, c.result
  end
end

Run the test:

docker-compose exec web rails test

In the above test, we are testing whether the sum method is adding the values and saving the values in the database. With Docker Compose, we have a very easy way of connecting to the database.

Here, the method is dependent on the database. The dependency can be not only a database but also a third-party service that provides a REST API. So, let us try to use a third-party service that provides a sum method, which we can use instead of writing our own.

# app/models/calculation.rb
require "net/http"

class Calculation < ApplicationRecord
  def self.sum(x, y)
    # The hostname must match the service name in the Docker Compose file
    uri = URI("http://sumservice:4010/sum")
    uri.query = URI.encode_www_form(x: x, y: y)
    res = Net::HTTP.get_response(uri)
    raise "Sum service returned HTTP #{res.code}" unless res.is_a?(Net::HTTPSuccess)

    result = res.body.to_i
    Calculation.create(x: x, y: y, result: result)
    result
  end
end

We can treat the REST API the same way we treated the database: as a dependency. We need to make sure the calls go to valid endpoints, but we do not want to call the actual service during the test. We could mock or stub the HTTP call, but then the test would no longer be an integration test, and we want it to be as realistic as possible. Instead, we can create a mock API endpoint that runs in its own container and have the code call that mock service, which responds with a result.

Let us modify the test first:

# test/models/calculation_test.rb
require 'test_helper'

class CalculationTest < ActiveSupport::TestCase
  test "should sum and save the data" do
    result = Calculation.sum(1, 2)
    c = Calculation.last

    # We no longer assert that result == 3: computing the sum is delegated
    # to the external service, so we only check that its answer was saved.
    assert_equal result, c.result
  end
end

Here, we have modified the test case to only test whether the result provided by the sum API is saved properly in the database. We do not need to test whether the actual value of the sum is correct, as this is handled by the external service, and we trust them.

To generate a mock server, we will describe the API with an OpenAPI (Swagger 2.0) definition.

Create a new folder named mock in the root directory, and inside it create a file named api.yaml:

# mock/api.yaml
swagger: "2.0"
info:
  description: "This is a sum api provider"
  version: "1.0.0"
  title: "API Provider"
host: "mock-service.com"
basePath: "/api"
schemes:
  - "https"
  - "http"
paths:
  /sum:
    get:
      produces:
        - "application/json"
      parameters:
        - name: "x"
          in: "query"
          description: "x value"
          required: true
          type: "integer"
        - name: "y"
          in: "query"
          description: "y value"
          required: true
          type: "integer"
      responses:
        "200":
          description: "successful operation"
          schema:
            type: "integer"
            example: 3

To run a server based on this specification, we can use any mock service provider. I will use Stoplight's Prism, which has a Docker image that we can add to our Docker Compose file:

version: "3.7" # the init option requires Compose file format 3.7 or later
services:
  db:
    image: postgres
    environment:
      POSTGRES_USER: calculator
      POSTGRES_PASSWORD: password
      POSTGRES_DB: calculator_test
  web:
    build: .
    ports:
      - "3000:3000"
    depends_on:
      - db
      - sumservice
    environment:
      CALCULATOR_DATABASE_PASSWORD: password
  sumservice:
    image: stoplight/prism:4
    command: mock -h 0.0.0.0 "/tmp/api.yaml"
    volumes:
      - ./mock:/tmp/
    ports:
      - "4010:4010"
    init: true
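With this setup, every request to the sumservice container is answered from the spec. As far as I understand Prism's default static mode, it returns the `example` declared for the matching response, so `/sum` should answer 3 whatever `x` and `y` are. A small, hypothetical sanity check (`sum_example` is not part of Prism) that reads that example out of the spec with Ruby's standard YAML library:

```ruby
require "yaml"

# Hypothetical helper: returns the example value declared for
# GET /sum -> 200 in a Swagger 2.0 document such as mock/api.yaml.
# "200" is quoted in the spec, so it is parsed as a String key.
def sum_example(spec_yaml)
  spec = YAML.safe_load(spec_yaml)
  spec.dig("paths", "/sum", "get", "responses", "200", "schema", "example")
end

# Usage from the project root (assumed layout):
#   sum_example(File.read("mock/api.yaml"))
```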

Now, let's run the test and see if it’s working as expected:

> docker-compose exec web rails test
Finished in 0.337710s, 2.9611 runs/s, 5.9222 assertions/s.
1 runs, 2 assertions, 0 failures, 0 errors, 0 skips

It works! The method calls the sumservice container to get the sum and connects to Postgres to save the data. All the dependencies are managed by Docker Compose, so we do not need to worry about what is already installed on the machine. This test will run on any machine without installing its dependencies; the only requirement is Docker.

If your application depends on other services, such as Redis or Memcached, you can find images for them on Docker Hub and add them to the Docker Compose file as needed.

Parallel Tests

Rails 6 added built-in support for running tests in parallel: the test/test_helper.rb of a new Rails 6 app calls parallelize(workers: :number_of_processors), so tests run in parallel worker processes based on the number of processors. Rails creates one test database per worker. With four processors, it creates calculator_test-0, calculator_test-1, calculator_test-2, and calculator_test-3. If you open psql in the database container (for example, with docker-compose exec db psql -U calculator -d calculator_test) and list the databases, you will see the following:

> \l
                                  List of databases
       Name        |   Owner    | Encoding |  Collate   |   Ctype    | Access privileges
-------------------+------------+----------+------------+------------+-------------------
 calculator_test   | calculator | UTF8     | en_US.utf8 | en_US.utf8 |
 calculator_test-0 | calculator | UTF8     | en_US.utf8 | en_US.utf8 |
 calculator_test-1 | calculator | UTF8     | en_US.utf8 | en_US.utf8 |
 calculator_test-2 | calculator | UTF8     | en_US.utf8 | en_US.utf8 |
 calculator_test-3 | calculator | UTF8     | en_US.utf8 | en_US.utf8 |

Even if you are not using Rails or Minitest, or are on an older version of Rails, you can still run tests in parallel when the dependencies run in Docker. Rails creates the per-worker databases automatically, but other dependencies, such as Redis or Memcached, may have to be partitioned manually.
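For Redis, one way to partition by hand is to give each worker its own logical database. Below is a sketch with a hypothetical `redis_url_for_worker` helper; it assumes a Compose service named redis on the default port, the default Redis configuration of 16 databases, and an app that builds its Redis client from a REDIS_URL environment variable. Rails' `parallelize_setup` hook, which runs in each worker process, is a natural place to call it.

```ruby
# Hypothetical helper: each parallel test worker gets its own Redis logical
# database, similar to how Rails gives each worker its own test database.
# Assumptions: a Compose service named "redis" on the default port, and the
# default Redis configuration of 16 databases (numbered 0-15).
REDIS_DATABASES = 16

def redis_url_for_worker(worker, host: "redis", port: 6379)
  unless worker.is_a?(Integer) && worker.between?(0, REDIS_DATABASES - 1)
    raise ArgumentError, "no Redis database available for worker #{worker.inspect}"
  end

  "redis://#{host}:#{port}/#{worker}"
end

# Possible wiring in test/test_helper.rb, assuming the app builds its Redis
# client from ENV["REDIS_URL"] after the worker process starts:
#   parallelize_setup do |worker|
#     ENV["REDIS_URL"] = redis_url_for_worker(worker)
#   end
```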

Because all our dependencies are managed by Docker Compose, we can also parallelize at the container level: split the tests into groups (for example, by folder), start a separate set of containers for each group (for example, with a different Docker Compose file or project name per group), and run the groups at the same time.
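A sketch of that idea in Ruby, under stated assumptions: `parallel_test_commands` and `run_in_parallel` are hypothetical helpers, and the project names are made up. Each group gets its own Compose project via `-p`, so it has its own db and sumservice containers and its own network. `docker-compose run` does not publish the web service's ports by default, but the sumservice host mapping `"4010:4010"` would still clash between projects, so this assumes that mapping is removed (the web container reaches sumservice over the Compose network and does not need it).

```ruby
# Sketch: split test files into `groups` buckets and build one command per
# bucket, each with its own Compose project name (-p).
# Assumptions: the docker-compose.yml from this post, with the host port
# mapping for sumservice removed; the project names are hypothetical.
def parallel_test_commands(test_files, groups:, project_prefix: "calculator")
  buckets = Array.new(groups) { [] }
  test_files.sort.each_with_index { |file, i| buckets[i % groups] << file }
  buckets.reject(&:empty?).each_with_index.map do |files, i|
    ["docker-compose", "-p", "#{project_prefix}#{i}",
     "run", "--rm", "web", "rails", "test", *files]
  end
end

# Starts every command at once and waits for all of them.
# Returns true only if every group exited successfully.
def run_in_parallel(commands)
  pids = commands.map { |cmd| Process.spawn(*cmd) }
  pids.map { |pid| Process.wait2(pid).last }.all?(&:success?)
end
```

Usage might look like `run_in_parallel(parallel_test_commands(Dir.glob("test/**/*_test.rb"), groups: 2))`. Because `run --rm` only removes the web container, each project's db and sumservice containers would afterwards be stopped with `docker-compose -p calculator0 down`, and so on.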

Adding Makefile to Simplify Commands

Since we use Docker and Docker Compose commands to run our tests, the commands are long and can be hard to remember during day-to-day development. To simplify them, let us add a Makefile. A Makefile defines named targets, each of which runs one or more shell commands.

.PHONY: up down test

up:
	docker-compose up

down:
	docker-compose down

test:
	docker-compose exec web rails test

We have added a few targets to the Makefile, and it can be extended as the application requires. Note that make requires each command line under a target to be indented with a tab character. Add the Makefile to the root directory of the Rails application. Once it is in place, we can run the tests with the following commands:

Boot the containers:

> make up

Run the test:

> make test

Using Docker for Local Development

We can also use Docker while developing the application. This helps to ensure that the application behaves consistently across testing, development, and production. Let us review what we need to change to use the above container for local development.

Volume Mount

When running the application locally, we want code changes to show up in the running server. Currently, the code is copied from our machine into the image when it is built, so changes we make to files on the local machine are not reflected in the container. To keep the code in sync, we can mount the project directory into the container as a volume.

In the Docker Compose file, let us add the volumes:

version: "3.7" # the init option requires Compose file format 3.7 or later
services:
  db:
    image: postgres
    environment:
      POSTGRES_USER: calculator
      POSTGRES_PASSWORD: password
      POSTGRES_DB: calculator_test
  web:
    build: .
    volumes:
      - .:/calculator # Volume mounted
    ports:
      - "3000:3000"
    depends_on:
      - db
      - sumservice
    environment:
      CALCULATOR_DATABASE_PASSWORD: password
  sumservice:
    image: stoplight/prism:4
    command: mock -h 0.0.0.0 "/tmp/api.yaml"
    volumes:
      - ./mock:/tmp/
    ports:
      - "4010:4010"
    init: true

Now, any change we make to the code will be reflected in the running server. Let us try this by creating a controller that calls our sum method:

# app/controllers/calculation_controller.rb
class CalculationController < ApplicationController
  def index
    result = Calculation.sum(params[:x], params[:y])
    render json: {result: result}
  end
end
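The controller above passes `params[:x]` and `params[:y]` through unchanged, and Rails delivers query parameters as strings (or `nil` when they are missing). With the mock service this does not matter, but a real service would receive whatever the client typed. One option, sketched here with a hypothetical `parse_operand` helper, is to convert the values with `Kernel#Integer`, which rejects non-numeric input instead of quietly turning it into 0 the way `String#to_i` does:

```ruby
# Hypothetical helper: converts a query-string value to an Integer.
# Returns nil for missing or non-numeric input. Base 10 is passed explicitly
# so that a value like "08" is not treated as an (invalid) octal literal.
def parse_operand(value)
  Integer(value, 10)
rescue ArgumentError, TypeError
  nil
end

# Possible use in CalculationController#index (sketch, not run here):
#   x = parse_operand(params[:x])
#   y = parse_operand(params[:y])
#   if x.nil? || y.nil?
#     return render json: { error: "x and y must be integers" }, status: :bad_request
#   end
#   render json: { result: Calculation.sum(x, y) }
```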

Add a root route:

# config/routes.rb
Rails.application.routes.draw do
  # For details on the DSL available within this file, see https://guides.rubyonrails.org/routing.html
  root "calculation#index"
end

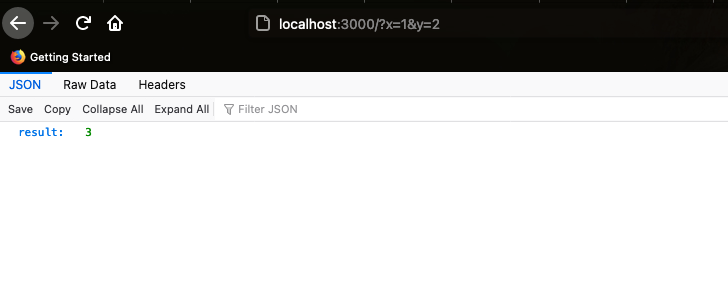

Now, if we go back and check the app in the browser, we get the expected response:

Note that the server is using the mock sumservice, so whatever we pass in the URL as query parameters, the response will always be 3, the example value from api.yaml. This may not seem like a good way to develop, but using a mock service provider has its advantages. When developing microservices, there can be many dependent services that would otherwise have to be booted before starting the service we are working on. Mocking them increases productivity during development: we can focus on the service we are developing rather than on the others.

If we want to do a final verification against the actual service, we can create a separate Docker Compose file that replaces the mock with the actual service's image. If it is an internal service, we can simply use its latest stable Docker image. I would still recommend keeping a mock service in the development environment.
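To make that swap a configuration change rather than a code change, `Calculation.sum` could read the service location from an environment variable instead of hard-coding `http://sumservice:4010/sum`. A minimal sketch, where `SUM_SERVICE_URL` and `sum_service_uri` are hypothetical names and the default points at the mock service from our Docker Compose file:

```ruby
require "uri"

# Hypothetical helper: builds the request URI for the sum service.
# SUM_SERVICE_URL is an assumed variable name; when it is unset, the default
# matches the mock's Compose hostname and port.
def sum_service_uri(x, y, env: ENV)
  base = env.fetch("SUM_SERVICE_URL", "http://sumservice:4010")
  # Ensure a trailing slash so URI.join appends "sum" instead of replacing
  # the last path segment of the base URL.
  uri = URI.join(base.end_with?("/") ? base : "#{base}/", "sum")
  uri.query = URI.encode_www_form(x: x, y: y)
  uri
end
```

The Compose file for the real service would then set `SUM_SERVICE_URL` in the web service's `environment` section, while the development and test setup keeps the mock default.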

.dockerignore

As the application grows, its files and dependencies make the Docker build context larger, and a larger build context takes more time to send to Docker and build into an image. To reduce the build context, we can list files that the image does not need in a .dockerignore file in the project root. (A large application can also slow down the volume mount, but .dockerignore only affects the build context, not what a volume mount exposes to the container.)

.git*
log/*
README.md
node_modules

Entrypoint

When the Rails server runs in a container, it sometimes refuses to start with an error saying that a server is already running. This happens because tmp/pids/server.pid was not deleted when the container exited. To avoid this error, we can add an ENTRYPOINT script that removes the server.pid file before the server starts.

FROM ruby:2.7
RUN curl -sS https://dl.yarnpkg.com/debian/pubkey.gpg | apt-key add -
RUN echo "deb https://dl.yarnpkg.com/debian/ stable main" | tee /etc/apt/sources.list.d/yarn.list
RUN apt-get update -qq && apt-get install -y nodejs yarn
RUN mkdir /calculator
WORKDIR /calculator
COPY Gemfile /calculator/Gemfile
COPY Gemfile.lock /calculator/Gemfile.lock
RUN bundle install
COPY package.json /calculator/package.json
COPY yarn.lock /calculator/yarn.lock
RUN yarn install --check-files
COPY . /calculator
EXPOSE 3000
# Exec form, so that CMD is passed to the script as its arguments ("$@").
# The shell form (ENTRYPOINT ./entrypoint.sh) would ignore CMD entirely.
ENTRYPOINT ["/calculator/entrypoint.sh"]
CMD ["rails", "server", "-b", "0.0.0.0"]

Create the entrypoint.sh file in the project root:

#!/bin/bash
set -e

# Remove a potentially pre-existing server.pid for Rails.
rm -f /calculator/tmp/pids/server.pid

# Exec the container's main process (the CMD from the Dockerfile).
exec "$@"

Make it executable (Docker keeps the file's permissions when it copies it into the image):

chmod +x entrypoint.sh

After rebuilding the image (docker-compose build), a leftover server.pid will no longer stop the server from starting.

Conclusion

It is important to distinguish between integration tests and unit tests. When writing unit tests, we can mock external dependencies, such as databases, to test the code in isolation. It is equally important to run integration tests that exercise the core business logic together with its dependencies, and Docker and Docker Compose make it easy to run those dependencies, such as databases and external services, during tests. It is good practice to keep these two kinds of tests separate so that we can run them independently. Having more unit tests and fewer integration tests makes the test suite faster and more reliable.

Some advantages of this workflow are as follows:

- Segregation between unit and integration tests.

- Since most CI services can run containers, tests behave the same locally and in CI, which keeps test results consistent between developer machines and the CI server.

- Using containers to deploy the application also helps ensure consistency in the production server.

- Reliable tests, as the dependencies are well managed.

- Portable tests that need little setup effort when shared among multiple developers.