Extracting data from databases is a major task of most Web applications. News websites, social networks, discussion forums, and your banking app have one thing in common: the need to retrieve information from a database. To make the process fast and efficient, we need to know how to structure not only the data but also information about where each record or a set of records are located. Int this article, we will dive into indexes, which hold all the necessary meta data to effectively query records from a database. This topic covers Rails’ basic approaches for effective queries using PostgreSQL as a database.

Indexes in real life

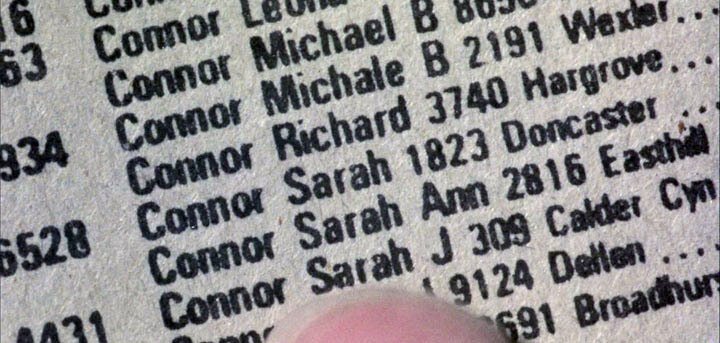

Imagine that it’s the 1980s, and you need to locate a name in a phone book. The name that you are looking for is Sarah Connor. Now, imagine that this phone book has millions of names and locations, and all these records are mixed and have no particular order. How much time would you spend locating all the Connors and then finding the right Connor. Fortunately, in real life, you don't have to deal with unorganized phone books.

Before the IT revolution, phone books were quite common. In phone books, all entries are listed in alphabetical order, so you can easily jump to a specific page and find your contact. In the "Terminator" movie, T-800, while trying to find Sarah Connor, navigates to the desired page and quickly finds all contacts named Sarah Connor to terminate them. This is a real-life index example. If it was all mixed up, even the Terminator itself would not be able to locate Sarah Connor. However, that would be a boring alternative.

There are many types of indexes (e.g., btree, hash, and gin), each designed to be used in specific situations. Computers are fast, so even with thousands of non-indexed records, you would not encounter performance issues. However, databases grow over time and can accumulate hundreds of thousands or even millions and billions of entries, so indexes are required in such situations. In this article, we will focus on common indexes, learn how to analyze their effectiveness, and choose suitable indexes for a specific situation in Rails.

Some Rails here

Let's take a look at a simple example of searching for a name in a phone book. We need to create a model User and perform all the necessary migrations. Run the following code to create a model and its corresponding migration file. Don't forget to perform the migration!

rails new phonebook_app --database=postgresql

rails db:create

rails g model User last_name:string first_name:string

rais db:migrate

class CreateUsers < ActiveRecord::Migration[6.1]

def change

create_table :users do |t|

t.string :last_name

t.string :first_name

t.timestamps

end

end

end

We need some data to work with and conduct performance testing. Download this seeds.rb file to populate our database. Put it in db/seeds.rb and run the rails db:seed command. It will create approximately 100,000 users with random names. If you want more records, just tune the file and increase the number of iterations.

It's time to conduct performance testing. Let's find all the Sarah Connors in our phone book.

User.where(last_name: "Connor", first_name: "Sarah")

# User Load (18.2ms) SELECT "users".* FROM "users" WHERE ...

This is called a "sequential scan"; each record in the database is checked to find and retrieve all entries with last_name Connor and first_name Sarah. My computer produces the results in about 16-25 ms. It's not very slow, but the amount of time required will increase linearly as the number of records increases. Moreover, the typical Web app makes several requests to a database during page rendering. All these milliseconds will add up and turn into seconds. However, indexes will help keep the amount of time required to a minimum.

Btree index

B-tree is the most used index type in relational databases. We will cover the practical aspect of this type of index, but if you are interested in theory, you can read great a article about it here. When you create an index in Rails without specifying its type, btree will be assigned by default. First, create migration and add two indexes for each column.

rails g migration add_indexes_to_users_last_and_first_name

class AddindexesToUsers < ActiveRecord::Migration[6.1]

def change

add_index :users, :last_name

add_index :users, :first_name

end

end

It's time for new types of tests!

User.where(last_name: "Connor", first_name: "Sarah")

# User Load (0.6ms) SELECT "users".* FROM "users" WHERE ...

With 100,000 entries, queries are 30-times faster! My computer takes 0.5-0.8 ms to perform such a query because a btree index requires O(log n) time, while a sequential table scan takes O(n) time. If you are unfamiliar with BigO notation, take a look at the Rubyist Guide to Big O Notation. After increasing the number of records in the database to 1,000,000, PostgreSQL takes 80 ms without indexes and 0.8 ms with indexes, which is 100-times faster! As you can see, btree index is much faster than a sequential scan, and the difference grows with the number of entries.

Your first impression may be that it's better to add indexes to every field in a database. However, first of all, only one of the defined indexes is working here (see the compound index section). Furthermore, indexes can slow down queries if there are not many records in the database, and each index takes up space in a computer's memory. Often, the size of the indexes may be larger than the amount of the data stored in a database. You can install the "rails-pg-extras" gem to get more information. I recommend that you carefully study the documentation of this gem, as it contains many useful functions that will make your work with the database as efficient as possible.

# Gemfile

gem "rails-pg-extras"

Now, go to console rails c and use these commands to get information about the index and table sizes:

RailsPGExtras.table_size

RailsPGExtras.index_size

In our case, the size of the indexes is not too big, but is btree indexing a good choice for our columns? Yes, if we are searching records for the full match. Many names are difficult to spell, and people expect to find them by partial match. Connor or Conor? Nah, please, give me all records with last name starting with "Con".

User.where("last_name ILIKE ?", "Con%")

# User Load (110.2ms) SELECT "users".* FROM "users" WHERE (last_name ILIKE 'Con%')

Although you can see lots of such code in applications, and columns are indexed using btree, many developers don't know when their indexes aren't working. Btree is unsuitable for partial matching, so our database will perform a full table scan. We need something different, a special index for such cases.

pg_trgm, GiST and GIN indexes

We should add the pg_trgm module to our PostgreSQL. This module helps in finding similarities in alphanumeric text based on trigram matching, as well as index operator classes that support fast searching for similar strings. What is a trigram, you might ask? A group of three consecutive characters taken from a string is defined as a trigram. By counting the number of shared trigrams, we can measure two strings' similarity. This simple idea appears to be very effective for measuring the similarity between words in many natural languages.

Let's break "Connor" into trigrams to better understand what it is: " c"," co",con,nno,nor,onn,"or ". An index should keep these trigrams and link them with our records. There is a trigram "Con", which is used in our query, and now, PostgreSQL can easily find all records with "Con" in their last_name. Before diving into indexes for such cases, let's add the extension for PostgreSQL using Rails migration.

rails g migration add_pg_trgm_extension

class AddPgTrgmExtension < ActiveRecord::Migration[6.1]

def change

enable_extension "pg_trgm"

end

end

Don't forget to run migration so that the extension will be enabled. To check whether it's enabled, go to the Postges console and run the pg_available_extensions function to get a list of all the available extensions; pg_trgm should be in the list.

psql -d your_database_name_development

SELECT * FROM pg_available_extensions;

Try taking any word and decomposing it into trigrams. When pg_trgm is enabled, you are able to use special functions from it. Check that your trigram word decomposition is correct.

SELECT show_trgm('Sarah');

This extension is designed to work with trigrams, but storing them is a job for GiST and GIN indexes. GIN and GIST have several differences. If you don’t know which one to choose, just remember a few rules: GIN searches quicker than GIST but is slower to update, and if you have a write-intensive table, use GIST. GIN is better for static data. Please be aware, however, that they don’t support exact matching with the equals operator! You can perform an exact match using like/ilike with no wildcards. If you want to use the equals operator where(last_name: "Connor"), you must create a standard BTREE index on the appropriate column.

Let's add GIN for first_name and GiST for the last_name column.

rails g migration add_gin_and_gist_indexes_to_users

class AddGinAndGistIndexesToUsers < ActiveRecord::Migration[6.1]

def change

add_index :users,

:first_name,

using: :gin,

opclass: 'gin_trgm_ops',

name: 'gin_index_users_on_first_name'

add_index :users,

:last_name,

using: :gist,

opclass: 'gist_trgm_ops',

name: 'gist_index_users_on_last_name'

end

end

We manually set the indexes’ names because there are already btree indexes on these columns(index_users_on_first_name and index_users_on_last_name). Run rails db:migrate and try performing our partial match query in the console.

User.where("last_name ILIKE ?", "Con%")

# User Load (3.5ms) SELECT "users".* FROM "users" WHERE (last_name ILIKE 'Con%')

This is much faster, and the indexes are working! Next, try first_name querying, where we have gin index, but numbers will be almost the same. For our table size, it doesn't matter; GiST and GIN have similar performance. However, for larger amounts of data, you should be more careful in choosing the appropriate index. It’s time to check the tables’ and indexes' sizes, and this my result for 1 million records in the users table:

RailsPGExtras.table_size

# +------------------------------------------------------------+

# | Size of the tables (excluding indexes), descending by size |

# +------------------------------+-----------------------------+

# | name | size |

# +------------------------------+-----------------------------+

# | users | 66 MB |

# | schema_migrations | 16 kB |

# | ar_internal_metadata | 16 kB |

# +------------------------------+-----------------------------+

RailsPGExtras.index_size

# +--------------------------------------------+

# | The size of indexes, descending by size |

# +-------------------------------+------------+

# | name | size |

# +-------------------------------+------------+

# | gist_index_users_on_last_name | 69 MB |

# | users_pkey | 21 MB |

# | gin_index_users_on_first_name | 19 MB |

# | index_users_on_first_name | 7168 kB |

# | index_users_on_last_name | 6880 kB |

# | ar_internal_metadata_pkey | 8192 bytes |

# | schema_migrations_pkey | 8192 bytes |

# +-------------------------------+------------+

As you can see, my data are 66 MB and GIN, and the GiST indexes are 69 MB and 19 MB. The indexes are starting to become larger than the data. The situation will become worse when your strings are longer and there are more trigrams. Be careful with these indexes and use them only if partial matching is often applied to some columns in the database.

Partial indexes

Tables and indexes are becoming increasingly larger. How can we decrease the memory footprint and continue to perform high-speed queries? It's time to add partial indexes. Partial indexes are incomplete and can return a subset of the data. In many cases, we perform similar queries. Imagine that we have a global phone book containing all of our users, but our customers are located in U.S., and 99% of them only search for U.S. contacts.

rails g migration add_country_to_users country:string

class AddCountryToUsers < ActiveRecord::Migration[6.1]

def change

add_column :users, :country, :string, default: 'US', limit: '2'

add_index :users, :country, where: "country = 'US'"

end

end

As you can see, we add the where option and defined a rule for when index should be applied. It will create a btree index for records with country equal to US. The index will be small (since it doesn’t include the global records) and fast, as well as have a lower memory footprint. However, this is a trade-off. When our customers search for records outside of the U.S., querying will be slower. In most cases, memory and computer clocks are cheaper than such nuisances, and you can avoid partial indexes. However, if you have a very large database and known query patterns, you can effectively use partial indexes.

I’ll share a suggestion that will help speed up your queries. In the migration example above, we're limiting our country column length to 2 characters because it's an ISO 3166-1 alpha-2 standard, and we don't need all 255 characters here, which is set by default. There is an old but useful article showing performance for different string lengths. Combining the appropriate indexes and string lengths will help speed up your queries.

Unique indexes

Unique indexes ensure that only one record can exist with such a value in a column. For example, in our phone book, we could have a Social Security Number (ssn) column, which is 9 digits in length and, more importantly, unique for every user.

add_index :users, :ssn, limit: 9, unique: true

When you query the ssn column, the database is confident that it has only one record with this value and doesn't perform additional useless manipulations after the first match. Furthermore, you get powerful protection against duplicates that Rails cannot provide on its own. If we refer to common uniqueness validation in a model, it's not enough. Many instances of your app are running in parallel and not synchronized between each other, which causes duplicates in the database. More information is available here.

validates :ssn, uniqueness: true # may not work

Compound (a.k.a. multicolumn, composite) indexes

Often, we query data using many columns. For example, as above, we query data using the first_name and last_name columns. Each column has its own index, but PostgreSQL utilizes only one of them in this case. It locates all records using an index matching query in one column and then iterates over all found records to filter and match the query for other columns. It's still fast because one index cuts a lot of records, and iterations of the rest are not a big deal.

However, sometimes, it's a good idea to create a compound index that indexes several columns. It's okay to create a compound index for last_name and first_name columns, but it is best for columns, when one of them has a Boolean type or variability in values is minimal. Let's take a look.

User.where(admin: false, last_name: "Connor")

# migration

class AddCompoundIndexToUsers < ActiveRecord::Migration[6.1]

def change

add_index :users, [:admin, :last_name]

end

end

All you need to do is pass an array of columns to the add_index method. Rails will create a compound index for you. It’s important to note that column order matters in an array. With a compound index, you can query data effectively, not only using two columns. Indexing will work for the admin field, too.

User.where(admin: false) # Compound index is working here!

EXPLAIN and ANALYZE

EXPLAIN and ANALYZE are available for PostgreSQL and MySQL databases. Topic is written using PostgreSQL syntax. Other relational databases may have different approaches for query analysis.

How do we know which indexes PostgreSQL is using or which indexes are not applicable to our query? We have already shown that with indexes, speed increases dramatically. However, what if your computer is just optimized for running sequential scans in parallel? Thus, because we need to prove that the indexes are working, how can we obtain information about index usage?

The most powerful tool at our disposal for understanding SQL queries and indexes is EXPLAIN ANALYZE, which is a Postgres command that accepts a statement, such as SELECT ..., UPDATE ..., or DELETE ..., executes the statement, and instead of returning the data, provides a query plan detailing what approach the planner took to execute the statement provided.

Let's repeat our first query.

User.where(last_name: "Connor", first_name: "Sarah")

This code will be processed by ActiveRecord and translated to an SQL query.

SELECT "users".* FROM "users" WHERE "users"."last_name" = "Connor" AND "users"."first_name" = "Sarah";

You can copy-paste it to the psql console, but first, expand it with the EXPLAIN ANALYZE command. Your code should look like this:

EXPLAIN ANALYZE SELECT "users".* FROM "users" WHERE "users"."last_name" = "Connor" AND "users"."first_name" = "Sarah";

Let's take a look at the output. The most important part for us is which index is applied in our query plan. As we can see, PostgreSQL is using index_users_on_last_name index for this query. It's our btree index for the last_name column. However, the index for the first name is not used here, despite first_name being present in the where clause. PostgreSQL uses the most appropriate one at its discretion.

Index Scan using index_users_on_last_name on users (cost=0.42..8.45 rows=1 width=49) (actual time=0.615..0.652 rows=20 loops=1)

Now it's time to see how a sequential scan is performed. We have not indexed the created_at and updated_at columns in our users table. This query extracts all users whose accounts were created before 2020.

User.where(created_at: ..Time.new(2020, 1, 1))

# User Load (98.6ms) SELECT "users".* FROM "users" WHERE "users"."created_

SQL looks like this:

SELECT "users".* FROM "users" WHERE "users"."created_at" <= '2019-12-31 18:00:00';

Don't be confused by a possible time shift for created_at, which Rails is performing. It's due to the time zone in which you are located.

If we add EXPLAIN ANALYZE, we can see that a sequential scan is performed. However, there is a bit more information than we can see with an index scan. Starting from PostgreSQL 9.6, sequential scans can be performed in parallel. Such an approach substantially increases the speed of sequential scans. What is important for us here is that we can see that this index is not used, and we probably need to add it.

Workers Planned: 2

Workers Launched: 2

-> Parallel Seq Scan on users (cost=0.00..13716.39 rows=1 width=49) (actual time=142.399..142.400 rows=0 loops=3)

Index-only scan

When querying data with an index, PostgreSQL uses it to find data and then goes to the table and retrieves all the data. Starting with PostgreSQL 9.2, you can perform an index-only scan, which means that PostgreSQL uses an index to retrieve data without diving into the table itself. This is another dramatic performance step, decreasing I/O operations! Although there are some constraints, you can retrieve data stored in an index. Here is an example:

User.where(last_name: "Connor").pluck(:last_name)

It will create a query that only retrieves last_name.

SELECT "users"."last_name" FROM "users" WHERE "users"."last_name" = 'Connor';

We have an index for this column, and if you add EXPLAIN ANALYZE to it, you'll see that PostgreSQL is performing an index-only scan! This approach works for compound indexes too.

Index Only Scan using index_users_on_last_name on public.users (cost=0.42..8.44 rows=1 width=7) (actual time=3.794..3.810 rows=20 loops=1)

Wrapping up

The following tips will help keep your queries effective.

Small, fixed-size tables are not a good choice for index use. Small tables will not show a significant performance effect. However, consider that small tables may grow over time, such as in the number of users. For instance, tables like 'continents' will remain small, because there are only six of them, and indexes are useless here unless, of course, the tectonic plates go crazy.

Use a unique index along with

validates uniqueness: truebecause it will ensure 100% protection from duplications, while Rails cannot ensure it alone.Don't use a btree index for a partial patch. Use the pg_trgm extension with GIN GiST indexes for such queries.

Choose high-write over low-read tables when using indexes. The downside of indexes is that they degrade write performance; however, they improve read performance.

Composite indexes would be a good choice if certain fields appear together in queries. For instance, in our users example above, it's preferable to create a composite index of (last_name, first_name).

Do not overcomplicate index implementation if you are planning to create an index on field1 and a composite index on (fieild1, fieild2). A composite index on (fieild1, fieild2) will work just fine because we can use it for querying field1 only.

Conclusion

Congrats. Now you are more confident with data querying in Rails with PostgreSQL. We've covered the basics of effective data querying to help avoid a lot of performance issues. Of course, there are many cases where a deeper understanding of the structure of databases and indexes is required, but in most cases, the information contained herein will be sufficient.