In the past few years, the tools we use to operate backend systems have evolved a lot. Both Docker and Kubernetes have shot up in popularity. Ruby on Rails (or simply Rails) has been making developers’ lives easier since 2004. To put things in context, Node.js was released in 2009, and version 1.0 of the Go language was released in 2012. Similarly, Docker was made public in 2013, and the initial release of Kubernetes dates to 2014.

In this post, we will deploy a simple Rails API on a Kubernetes cluster running on your local machine. We will keep the scope small, as this post aims to show a way to run a Rails app on Kubernetes in a dev environment with as few moving parts as possible. Let’s get the ball rolling.

What is Kubernetes?

Like many other technologies, Kubernetes means different things to different groups of people. To begin, let’s have a look at the official definition from Kubernetes.io:

Kubernetes is a portable, extensible, open-source platform for managing containerized workloads and services, that facilitates both declarative configuration and automation.

The name Kubernetes originates from Greek, meaning helmsman or pilot. Thus, Kubernetes is a pilot that manages multiple workloads or containers consistently and effectively. Kubernetes is a container orchestrator that can efficiently manage hundreds or even thousands of containers. It makes it easy to deploy, operate, and manage a variety of workloads by abstracting away the underlying infrastructure and giving operators an orderly and battle-tested API to work with.

I know it is still buzzword heavy, so I recommend watching this video; it beautifully explains Kubernetes to children.

What does Kubernetes do?

Let’s take a step back and try to figure out what Kubernetes does. We can safely say this is the era of deploying containers for a variety of workloads, from web applications and cron jobs to resource-intensive AI/ML tasks. In the container landscape, Docker has won the race, or maybe it had the first-mover advantage.

With this in mind, we might begin small with a couple of our apps running on containers. This equates to having 2-10 containers running at any given time. We pilot this idea, we play a bit more with it, and we start liking the good things containers provide. We add 3-4 more apps on the container bandwagon.

A bit more time passes, and before we know it, we have tens of applications in containers, resulting in hundreds of containers running. The question of how to start or stop these containers arises. How do we scale them and manage resources (CPU/RAM) for them? How do we make sure that X number of containers are always running for application Y? How do we make service A talk to service B efficiently?

The answer to all of these questions is a container orchestrator. Kubernetes beats all of its competitors in the container orchestration space. By mid-2017, it had become the de facto choice in this space. Next, we will jump into how to deploy a Ruby on Rails application on a local Kubernetes cluster.

Example application

For this post, we will be using a Bands API Rails app. It was originally coded by Andy, who has published videos and a blog post on how the app was created. The Ruby and Rails code is all his work. I would like to thank him for his great work.

I have forked his original repository and dockerized it so that the app can be deployed on Kubernetes. To keep things simple, I have also replaced the SQLite database with a PostgreSQL database running on ElephantSQL’s free plan. We will not be running a database on Kubernetes in this tutorial.

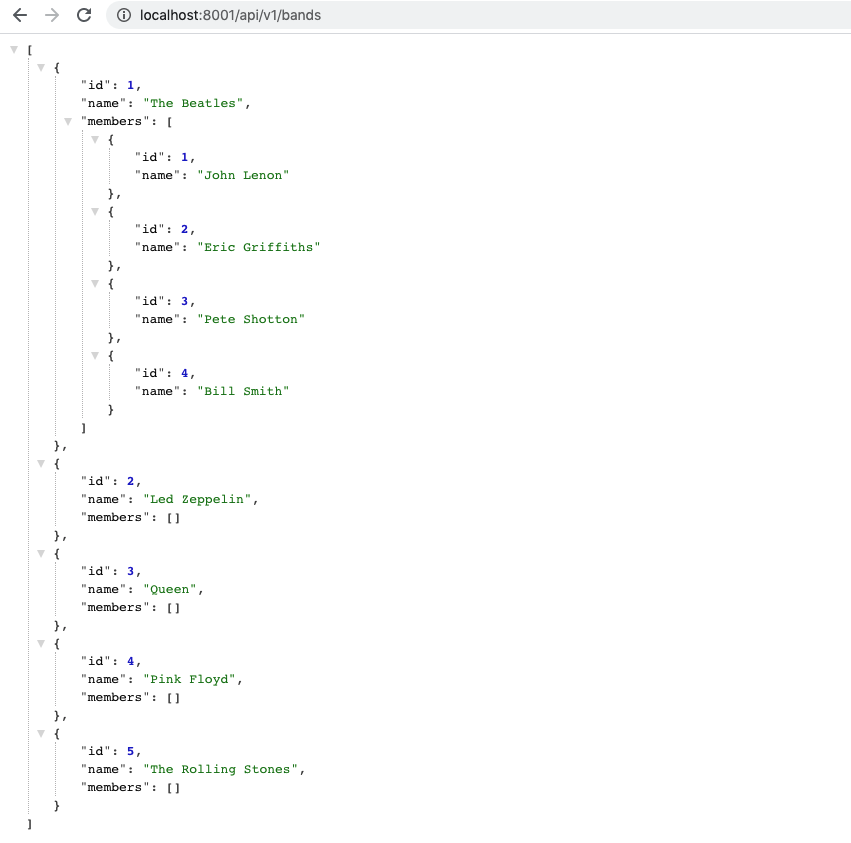

From a functional viewpoint, the main API endpoint we are concerned with for the scope of this tutorial is the GET bands endpoint available at /api/v1/bands, which should give back 5 bands. Next, we will describe the Kubernetes artifacts we will use to deploy this Rails API to Kubernetes.

Kubernetes deployment

A Kubernetes deployment is a resource object in Kubernetes that gives us a way to update the application in a declarative way. It enables us to describe an application’s life cycle, such as the images to use for the application, the ports for the container, and any necessary health checks.

The deployment process generally includes making the new version available, directing traffic from the old version to the new one, and stopping the old version. Capistrano has been doing this since 2006. What sets Kubernetes deployments apart is that they keep a minimum number of pods available during a rollout, and the rollout strategy minimizes or eliminates downtime. For example, a rolling update strategy ensures that new pods gradually replace old pods, controlled by settings such as maxSurge and maxUnavailable. Because this is done declaratively, as a user or operator you only need to ask Kubernetes to apply a given deployment, and Kubernetes does the rest. Next up is the Kubernetes config map.
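As a rough illustration of the rolling update settings mentioned above, the sketch below writes a hypothetical strategy fragment to a temporary file and works out the pod-count bounds it implies. The replica count and the maxSurge and maxUnavailable values are assumptions picked for the example; they do not come from the Bands API repository.

```shell
#!/bin/sh
# Hypothetical values for illustration; the Bands API deployment in this
# post does not set a strategy, so Kubernetes applies its defaults.
replicas=4
max_surge=1        # extra pods allowed above the desired count during a rollout
max_unavailable=1  # pods allowed to be unavailable during a rollout

# Write a fragment that could be merged into a Deployment manifest.
fragment="$(mktemp)"
cat > "$fragment" <<EOF
spec:
  replicas: ${replicas}
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: ${max_surge}
      maxUnavailable: ${max_unavailable}
EOF

# Bounds Kubernetes keeps to while replacing old pods with new ones.
max_pods=$((replicas + max_surge))
min_available=$((replicas - max_unavailable))

cat "$fragment"
echo "At most ${max_pods} pods exist at once; at least ${min_available} stay available."
```

With these assumed numbers, the rollout never runs more than one extra pod and never takes more than one pod out of service at a time.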

What is a K8s config map?

A Kubernetes config map is a dictionary of configuration or settings expressed as key-value pairs of strings. Kubernetes passes these values to the containers. Following the twelve-factor app methodology, we can separate our config from the application code using a Kubernetes config map. Config maps can differ per environment, such as staging or production.

A Kubernetes config map is generally used to store non-confidential data, but depending on the use case, sensitive data can also be put into one. The prescribed way to handle sensitive data, however, is a Kubernetes Secret. Reading more about Kubernetes ConfigMaps is worthwhile if you want the details.

We will be using a config map to inject values, including database credentials and other environment variables for the Rails app, such as RAILS_ENV and SECRET_KEY_BASE.
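This post keeps the credentials in the config map for simplicity. As a sketch of the Secret alternative mentioned above, the script below generates a random key of the same shape that rails secret prints (128 hex characters) and writes a Secret manifest to a temporary file. The name bands-api-secrets and the replace-me password are hypothetical placeholders and are not part of the repository.

```shell
#!/bin/sh
# Generate 64 random bytes as 128 hex characters, the same shape of value
# that `rails secret` prints. Only head, od, and tr are needed.
secret_key_base="$(head -c 64 /dev/urandom | od -An -tx1 | tr -d ' \n')"

secret_file="$(mktemp)"
# "bands-api-secrets" is a hypothetical name chosen for this example.
cat > "$secret_file" <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: bands-api-secrets
type: Opaque
stringData:
  SECRET_KEY_BASE: "${secret_key_base}"
  DATABASE_PASSWORD: "replace-me"
EOF

echo "Wrote Secret manifest to ${secret_file}"
```

A container could then load these values by adding a secretRef entry with the name bands-api-secrets next to the existing configMapRef under envFrom. A file like this should stay out of version control.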

Kubernetes job

A Kubernetes job creates a transient pod that performs a given task. A job is well suited to running a batch process or an important ad-hoc task. Jobs run a specified task until completion, which is different from a deployment, which keeps pods running to match a desired state. When a specified number of pods complete successfully, the job is considered successful. The success criteria are defined in the job’s YAML file.
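To make the success criteria more concrete, here is a minimal sketch of the fields in a job spec that control completion and retries. The values are assumptions for illustration; the migrate job used later in this post does not set them and relies on the defaults.

```shell
#!/bin/sh
# Hypothetical values; this is a fragment to merge into a Job manifest,
# not a complete manifest on its own.
job_fragment="$(mktemp)"
cat > "$job_fragment" <<'EOF'
spec:
  completions: 1              # pods that must finish successfully (default 1)
  parallelism: 1              # pods allowed to run at the same time (default 1)
  backoffLimit: 3             # retries before the job is marked failed (default 6)
  activeDeadlineSeconds: 300  # upper bound on the job's total runtime
  template:
    spec:
      restartPolicy: Never    # a Job's pod template must use Never or OnFailure
EOF

cat "$job_fragment"
```

For a one-off task such as a database migration, a single completion with a small retry budget is usually enough.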

A job created on a repeated schedule is a Kubernetes cron job.

We will be using a Kubernetes job in our Rails Bands API application to run the rails db:migrate command so that the database schema is at the latest version. Next, we will get our hands dirty by deploying the Bands API application to our local Kubernetes cluster on Kind.

Prerequisites

Before we move on to typing commands and running Kubernetes on your local machine, you should do the following:

- Be aware of how Docker works generally and have it installed and running locally.

- Have basic knowledge of how Kubernetes works.

- Be using a Unix-like (*nix) operating system, as I am unsure how well Docker works on Windows.

- Have kubectl installed and working on your local machine.

- Be aware of how Kind works and have it installed; in my case, the brew install kind command was used to get Kind 0.9.0.
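A quick way to confirm the tools in the list above are installed is a small check like the following. It is only a sketch: it looks for each command on the PATH and does not verify versions or that the Docker daemon is actually running.

```shell
#!/bin/sh
# Report whether a command is available on the PATH.
check_tool() {
  if command -v "$1" >/dev/null 2>&1; then
    echo "found:   $1"
    return 0
  fi
  echo "missing: $1"
  return 1
}

# Count how many of the required tools are not installed.
missing=0
for tool in docker kubectl kind; do
  check_tool "$tool" || missing=$((missing + 1))
done
echo "${missing} required tool(s) missing"
```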

With these things out of the way, let’s carry on and deploy our Rails Bands API to Kubernetes.

Deploy our Rails API to Kubernetes

To deploy our Bands API application built on Rails and Postgres, we will need to clone the GitHub repository by executing the following command:

git clone git@github.com:geshan/band_api.git

After it is cloned we can go into the directory with:

cd bands_api

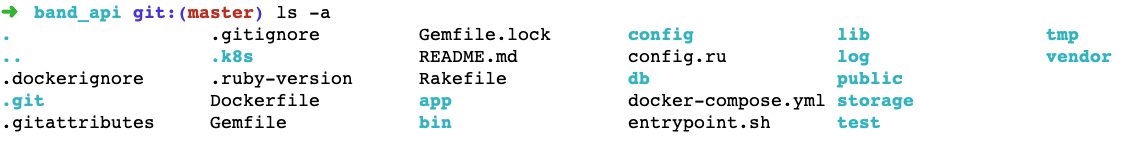

If we list the files in that bands_api directory, we should see something like below:

The two main things to note here are the Dockerfile and .k8s folder containing the Kubernetes YAML files.

In the next step, we will create the Kind Kubernetes cluster.

Start a local Kind Kubernetes cluster

To create a local Kubernetes cluster with Kind, we will execute the following command:

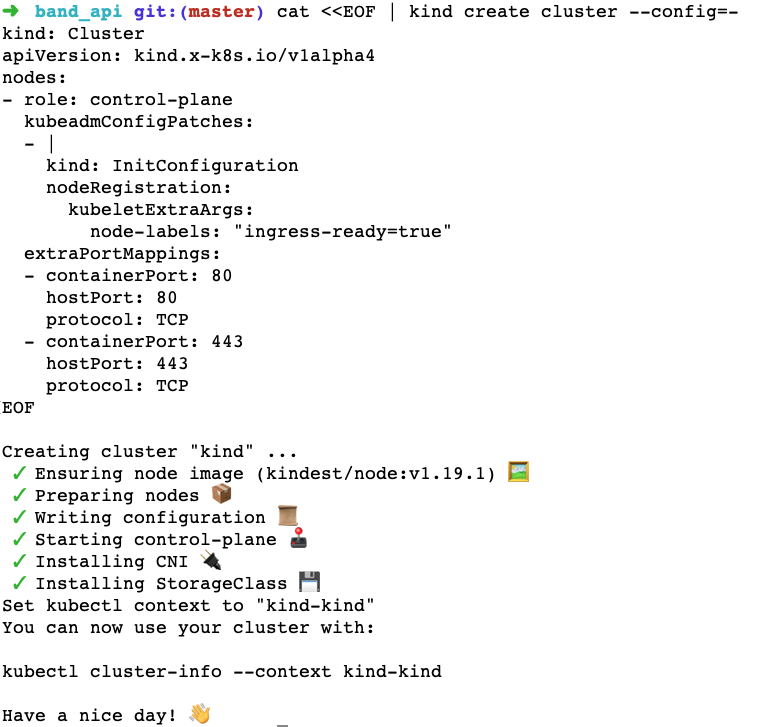

cat <<EOF | kind create cluster --config=-
kind: Cluster
apiVersion: kind.x-k8s.io/v1alpha4
nodes:
- role: control-plane
  kubeadmConfigPatches:
  - |
    kind: InitConfiguration
    nodeRegistration:
      kubeletExtraArgs:
        node-labels: "ingress-ready=true"
  extraPortMappings:
  - containerPort: 80
    hostPort: 80
    protocol: TCP
  - containerPort: 443
    hostPort: 443
    protocol: TCP
EOF

This creates a Kind Kubernetes cluster with ports 80 and 443 of the Kind node container mapped to the same ports on your local machine.

It should take a couple of minutes and come back with output like the following:

Congrats! Our local Kubernetes cluster is running inside a Docker container thanks to Kind.

For the next step, we will confirm that kubectl can reach the Kubernetes cluster we just created by running the following command with the kind-kind context:

kubectl cluster-info --context kind-kind

Next, we will deploy our Bands API app to our local Kubernetes cluster.

Deploy our Rails app to the Kubernetes cluster

To deploy our Rails Bands API app to the Kubernetes cluster we created in the previous step, we will need to run kubectl apply on the three files in our .k8s folder. These three files are discussed in the following paragraphs.

ConfigMap

The first file in our .k8s folder is the configmap.yaml file, which has the following contents:

apiVersion: v1
kind: ConfigMap
metadata:
  name: bands-api
data:
  PORT: "3000"
  RACK_ENV: production
  RAILS_ENV: production
  RAILS_LOG_TO_STDOUT: "true"
  RAILS_SERVE_STATIC_FILES: "true"
  SECRET_KEY_BASE: "top-secret"
  DATABASE_NAME: "zrgyfclw"
  DATABASE_USER: "zrgyfclw"
  DATABASE_PASSWORD: "secret"
  DATABASE_HOST: "topsy.db.elephantsql.com"
  DATABASE_PORT: "5432"

In addition to the version and the kind of Kubernetes artifact, this file basically lists all the configs that will be available in the pods, in the form of environment variables. These configs include Rails-specific variables and database credentials.

Database migrate job

The second file in the .k8s folder is the database migrate job:

apiVersion: batch/v1
kind: Job
metadata:
  name: bands-api-migrate
  labels:
    app.kubernetes.io/name: bands-api-migrate
spec:
  template:
    metadata:
      labels:
        app.kubernetes.io/name: bands-api-migrate
    spec:
      containers:
      - command:
        - rails
        - db:migrate
        envFrom:
        - configMapRef:
            name: bands-api
        image: docker.io/geshan/band-api:latest
        imagePullPolicy: IfNotPresent
        name: main
      restartPolicy: Never

One thing to note in this Kubernetes job is that it uses the Docker image available on Docker Hub at docker.io/geshan/band-api:latest. I have specifically pushed this image so that it is available for this demo application. For a real application, the image would usually be pushed to a private Docker registry instead.

The other thing to note is that it uses the config map discussed in the previous section. The job runs the rails db:migrate command to bring the database schema up to date. Next, we will look at the deployment file.

Deployment

The deployment file brings up the web server for Rails that serves up the Bands API. The content of the deployment.yaml file is shown below:

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app.kubernetes.io/name: bands-api
    process: web
  name: bands-api-web
spec:
  selector:
    matchLabels:
      app.kubernetes.io/name: bands-api
      process: web
  template:
    metadata:
      labels:
        app.kubernetes.io/name: bands-api
        process: web
    spec:
      containers:
      - env:
        - name: PORT
          value: "3000"
        envFrom:
        - configMapRef:
            name: bands-api
        image: docker.io/geshan/band-api:latest
        imagePullPolicy: IfNotPresent
        name: bands-api-web
        ports:
        - containerPort: 3000
          name: http
          protocol: TCP
        readinessProbe:
          httpGet:
            path: /
            port: 3000
          initialDelaySeconds: 10
          periodSeconds: 10
          timeoutSeconds: 2

Note that it uses the same Docker image as the job. The application runs on port 3000. To check whether the application is ready, a call is made to the root route / on port 3000; if it returns a successful response, the pod is considered healthy and ready to receive traffic. The first check happens 10 seconds after the container starts, and the check is then repeated every 10 seconds with a 2-second timeout.
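To make the readiness check a little more tangible, the sketch below mimics it by hand. An HTTP probe counts any status code from 200 to 399 as success, not only 200. The function names status_is_ready and probe_once are made up for this example, and the real check is performed by the kubelet, not by curl.

```shell
#!/bin/sh
# An HTTP probe counts any status from 200 to 399 as success.
status_is_ready() {
  [ "$1" -ge 200 ] && [ "$1" -lt 400 ]
}

# Probe a URL once, giving up after 2 seconds like timeoutSeconds: 2.
# curl reports 000 as the status when it cannot connect or times out.
probe_once() {
  code="$(curl -s -o /dev/null -m 2 -w '%{http_code}' "$1")"
  status_is_ready "${code:-000}"
}

# Show how a few sample status codes would be classified.
for code in 200 302 404 500; do
  if status_is_ready "$code"; then
    echo "$code -> ready"
  else
    echo "$code -> not ready"
  fi
done
```

Once the port-forward from the testing section is running, something like probe_once http://localhost:8001/ && echo ready would perform one such check against the app from your machine.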

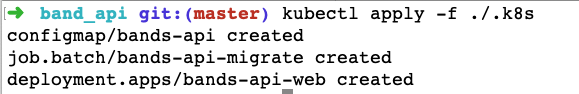

We can apply these files to create and deploy the Bands API application on our Kubernetes cluster with the following command:

kubectl apply -f ./.k8s

This will result in the following output:

All three Kubernetes objects have been successfully applied to our Kubernetes cluster. We can proceed to test the app.
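If you would like to confirm that the objects are not only created but also finished and ready, a small helper along these lines may be useful. It is a sketch that assumes the object names from the YAML files above and an arbitrary 120-second timeout; the function name verify_bands_api is made up for this example.

```shell
#!/bin/sh
# Check the three objects created by `kubectl apply -f ./.k8s`.
# Stops at the first command that fails.
verify_bands_api() {
  kubectl get configmap bands-api &&
  kubectl wait --for=condition=complete job/bands-api-migrate --timeout=120s &&
  kubectl rollout status deployment/bands-api-web --timeout=120s
}

# Call it after applying the files:
#   verify_bands_api
```

If the migrate job does not complete, kubectl logs job/bands-api-migrate shows the output of the migration.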

Test the app locally

To test the Bands API application, we can run the following command:

kubectl port-forward deployment/bands-api-web 8001:3000

The above command tells kubectl to forward local port 8001 to port 3000 of a pod belonging to the deployment bands-api-web. You can read more about kubectl port forwarding here.

After running the command, if we hit http://localhost:8001/api/v1/bands, we should see something like below:

Hurray! Our Rails app is running successfully in the local Kubernetes cluster. There are other ways to test the app, but this is the easiest way.
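The same check can be made from the command line instead of a browser. The helper below is a minimal sketch that assumes the port-forward above is still running; the function name fetch_bands is made up for this example, and the port defaults to the 8001 used earlier.

```shell
#!/bin/sh
# Fetch the bands list through the port-forward started earlier.
# -f makes curl exit non-zero on HTTP errors;
# -sS hides the progress bar but still prints error messages.
fetch_bands() {
  curl -fsS "http://localhost:${1:-8001}/api/v1/bands"
}

# Run it from a second terminal, since port-forward keeps the first one busy:
#   fetch_bands
#   fetch_bands 9000   # if a different local port was forwarded
```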

Next steps

In this post, we have only scratched the surface of Kubernetes’ capabilities. I have deliberately left out the service part, which exposes an application running as a set of pods as a network service. If you are already curious about Kubernetes services, try to understand them visually or wait for the next part.

Conclusion

We have seen how you can deploy a simple Rails app on a local Kubernetes cluster. This tutorial’s scope is just enough to understand how to run an app in a local context. It is important to understand these concepts first and then build on them.

This will help you understand more about how Kubernetes functions. After understanding how Kubernetes works with a deployment, a config map, and a job, you can step into other Kubernetes artifacts, such as services and cron jobs. We will cover them in the next part, so stay tuned!