When an application runs, it performs a large number of tasks, many of them behind the scenes. Even a simple to-do application does more than you'd expect: at a bare minimum, it handles user logins and creating, updating, deleting, and duplicating to-dos. Each of these tasks can succeed or fail with an error.

For anything you're running that has users, you should at least consider recording the events that happen so they can be analyzed later, for example to identify performance bottlenecks. This is where logging is useful. Without logging, you have very little insight or observability into what your application is actually doing.

In this article, you'll learn how to create logs in a Python application using the Python logging module. Logging can help Python developers of all experience levels develop and analyze an application's performance more quickly. Let's dig in!

What is logging in Python?

Logging is the process of storing the details about events that happen in an application. Logs provide an additional way to check the flow that an application is going through. They are the historical record of the state of the application.

For instance, if an application crashes, it becomes difficult to track the issue without any details of what happened before the crash. In such a case, logging ideally provides a log trace, which can help developers detect the cause of the issue by going through the logs and recreating the actual scenario on their machine. With a properly set up logging system, you can detect the cause of errors to the accuracy level of the line number.

It's hard to get your log messages exactly right. You'll have to balance logging enough information to provide useful insight with not logging so much that you can't find that useful insight.

When and why should developers use logging?

If you are a developer, you have probably used print statements to debug your Python application. It's everyone's first method of logging, even if it's just when you write "Hello world!" This is the most basic form of logging.

When trying to obtain information for debugging your application, you need much more information than you might think, such as timestamps, related modules, and types. Sometimes this extra information is called metadata.

You can use the print command in a small application, but this strategy quickly becomes unwieldy in a large, complex application in which multiple modules are communicating and sharing data with each other.

To resolve this issue, you need a well-built logging module that can write all kinds of information (metadata!) related to your application to one of your output streams (e.g., a console or log file) in a structured and predictable manner.
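To make the contrast with print concrete, here is a minimal sketch. The logger name `todo.auth` and the message are made up for illustration, and the records go to an in-memory buffer only so the result is easy to inspect.

```python
import io
import logging

# Hypothetical logger name for illustration; any dotted name works.
logger = logging.getLogger("todo.auth")
logger.setLevel(logging.INFO)
logger.propagate = False  # keep this demo independent of the root logger

# Send records to an in-memory stream so the result can be inspected.
stream = io.StringIO()
handler = logging.StreamHandler(stream)
handler.setFormatter(
    logging.Formatter("%(asctime)s %(levelname)s %(name)s %(module)s: %(message)s")
)
logger.addHandler(handler)

print("user logged in")        # plain text, no metadata
logger.info("user logged in")  # same message plus timestamp, level, and origin

log_line = stream.getvalue().strip()
print(log_line)
```

The second printed line carries the timestamp, severity, logger name, and module alongside the message, which is the metadata that print leaves out.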

The different levels of Python logging

In Python, the logging module provides a powerful framework for log messages, with five standard levels that indicate the severity of an event. Each level maps to a numeric value, shown in parentheses below, and these levels help software engineers filter logs effectively. Let's explore each logging level in detail so you have an idea of when and how to use them.

Debug (10)

The DEBUG level is the lowest severity level and is primarily used for diagnostic purposes. Messages logged at this level contain detailed information that is typically useful only during development or while troubleshooting specific issues.

This is an appropriate logging level to track the value of a variable during the execution of a confusing section of code or even to debug changing data as it moves through your application. Unless it's absolutely necessary, production Python applications often don't persist debug logs.

Info (20)

The INFO level represents general, operational messages about the flow of your application. These messages are meant to provide a high-level trace of what the application is doing without delving into the details.

Some examples of good usage of the INFO log level are for logging the start and completion of a background job or maybe successful API calls or database connections.

Warning (30)

The WARNING level is used when something unexpected happens. Usually, it's reserved for logging an event occurring that could potentially lead to issues. Warnings do not necessarily require immediate attention but should be reviewed to prevent future problems. You'll often see warning logs indicating things like recoverable errors, network failures, or deprecated method calls.

Error (40)

The ERROR level indicates a serious issue that prevents part of the application from functioning correctly. These logs should provide enough detail to diagnose and address the problem.

Some examples of problems that warrant the use of the ERROR log level are things like failed database queries or unhandled exceptions.

Critical (50)

The CRITICAL level is the highest Python log severity level and is reserved for very serious errors that may cause the entire application to fail. These logs should ideally be accompanied by alerts or notifications (like via Honeybadger!) to ensure they are addressed promptly.

Configuring Python log levels

Python's logging module allows you to configure the minimum severity level for log messages. Events below that threshold are discarded, so you can create a log for every event or only for events at or above a particular severity level. Keeping only events below a given level is also possible, but it requires a custom filter.

In the later sections of this article, you'll learn how to use these severity levels in the logging module.
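As a quick preview, the following sketch shows that the five levels are plain integers and that a logger's threshold decides which calls are processed. The logger name `levels.demo` is an arbitrary choice for this example.

```python
import logging

# The five standard levels are plain integers.
levels = {
    name: getattr(logging, name)
    for name in ("DEBUG", "INFO", "WARNING", "ERROR", "CRITICAL")
}
print(levels)

# Hypothetical logger name; setLevel sets the minimum severity it handles.
logger = logging.getLogger("levels.demo")
logger.setLevel(logging.WARNING)

print(logger.isEnabledFor(logging.INFO))   # below the threshold
print(logger.isEnabledFor(logging.ERROR))  # at or above the threshold
```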

Using the logging module in Python

To understand the concept of logging in Python, create a project directory (python-logging) by running the following commands in the terminal:

mkdir python-logging

cd python-logging

In the python-logging directory, create a main.py file and add the following code to it:

# 1

import logging

# 2

logging.debug('This is a debug message')

logging.info('This is a info message')

logging.warning('This is a warning message')

logging.error('This is an error message')

logging.critical('This is a critical message')

In the above code, you are performing the following:

- You are importing Python's built-in logging module.
- You are using the logging module's helper functions to print logs for the five severity levels to the console window.

Run the main.py file by running the following command in your terminal:

python main.py

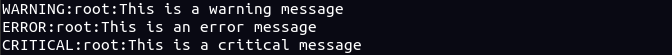

If you look at the output in the terminal window, you'll see that only the logs at the warning, error, and critical severity levels were printed.

This is because, by default, the logging module only prints logs with a severity level equal to or greater than WARNING.
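The reason lies in how loggers resolve their level. A small sketch, assuming a throwaway logger named `defaults.demo`: a new logger has no level of its own and falls back to the level of the root logger, which is WARNING unless something has reconfigured it.

```python
import logging

root = logging.getLogger()  # the logger behind logging.debug(), logging.info(), ...

# A freshly created logger has no level of its own (NOTSET) and inherits
# its effective level from the root logger.
child = logging.getLogger("defaults.demo")  # hypothetical name
print(child.level == logging.NOTSET)
print(child.getEffectiveLevel() == root.getEffectiveLevel())
print(logging.getLevelName(root.getEffectiveLevel()))
```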

Configuring a Python log severity level

To override the default severity level, you can configure the logging module by using the basicConfig function and passing the level parameter to it. To do so, update your existing main.py file to include the following:

import logging

logging.basicConfig(level=logging.DEBUG) # added

logging.debug('This is a debug message')

logging.info('This is a info message')

logging.warning('This is a warning message')

logging.error('This is an error message')

logging.critical('This is a critical message')

In the above code, you are using the basicConfig function to configure the logging module's settings. You have specified the level parameter as logging.DEBUG, which configures the logging module to log all messages that have a severity level equal to or greater than the debug level.

Run the main.py file by running the following command in the terminal:

python main.py

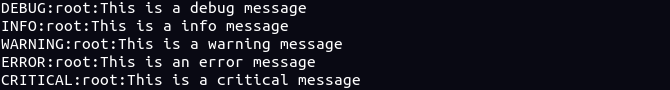

This time, you'll see all the logs printed on your terminal window.

Logs of all severity levels created by the Logging module.

Based on your individual situation's requirements, you can configure the severity level of your application. For example, while developing the application, you can set the level parameter to logging.DEBUG, and in a production environment, you can set it to logging.INFO.
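One common way to switch levels between environments is to read the level from an environment variable. This is only a sketch: `LOG_LEVEL` is a hypothetical variable name chosen for this example, not something the logging module reads on its own.

```python
import logging
import os

# LOG_LEVEL is a hypothetical environment variable name chosen for this sketch.
level_name = os.environ.get("LOG_LEVEL", "INFO").upper()

# Fall back to INFO when the value is not a known level name.
level = getattr(logging, level_name, None)
if not isinstance(level, int):
    level = logging.INFO

# force=True (Python 3.8+) replaces any handlers configured earlier.
logging.basicConfig(level=level, force=True)
logging.getLogger().info("Logging configured at %s", logging.getLevelName(level))
```

Running the program with `LOG_LEVEL=DEBUG` set during development and leaving it unset in production gives the behavior described above without changing the code.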

Writing logs to a log file in Python

When you are developing an application, it is convenient and straightforward to just write the logs to the console. However, in a non-development environment, you almost always want a way to store the application logs persistently. To accomplish this, it is common to store your logs in a log file. This log file can be accessed at any time and used to debug issues or extract all kinds of information related to the events happening in your Python application.

How to create a log file in Python

To create a log file in Python, you can use filename and filemode parameters in the basicConfig function.

Replace the contents of the main.py file with the following code:

import logging

# 1

logging.basicConfig(filename='app.log', filemode='w', level=logging.DEBUG)

logging.warning('This is a warning message')

logging.debug('This is a debug message')

In the above code, you are specifying the filename and filemode parameters in the basicConfig function. This configuration creates an app.log file in the directory you run the application from (here, the root of your project) and stores all the logs in it rather than printing them to the console window. The filemode parameter controls how the file is opened and is typically either write (w) or append (a).

An important thing to note here is that the write mode will recreate the log file every time the application is run, and append mode will add the logs to the end of the log file. The append mode is the default mode.

Run the main.py file by running the following command in the terminal:

python main.py

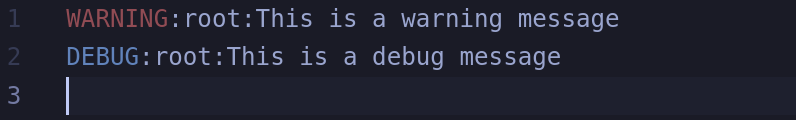

Next, check for the app.log file at the root of your application. You'll see the following log messages:

Logs are stored in a log file.
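The difference between write and append mode can be checked with a short experiment. This sketch uses logging.FileHandler directly, with its mode argument, instead of basicConfig so that the file can be reopened several times in one run. The helper `write_once` and the logger name are made up for the example.

```python
import logging
import os
import tempfile

def write_once(path, mode, message):
    """Open the log file in the given mode, write one record, and close it."""
    handler = logging.FileHandler(path, mode=mode)
    logger = logging.getLogger("filemode.demo")  # hypothetical logger name
    logger.setLevel(logging.WARNING)
    logger.propagate = False
    logger.addHandler(handler)
    logger.warning(message)
    logger.removeHandler(handler)
    handler.close()

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "app.log")
    write_once(path, "a", "first run")
    write_once(path, "a", "second run")  # append keeps the earlier line
    with open(path) as f:
        appended = f.read().splitlines()
    write_once(path, "w", "third run")   # write truncates the file first
    with open(path) as f:
        rewritten = f.read().splitlines()

print(appended)
print(rewritten)
```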

Using multiple log files

For complex applications, you might want to direct logs from different components to separate files for better organization and easier parsing. This can be achieved without too much extra setup by simply using multiple handlers.

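import logging

# Create one named logger per component
first_logger = logging.getLogger('first')
second_logger = logging.getLogger('second')

# Without an explicit level, both loggers inherit WARNING from the root
# logger, which would silently drop the info message below
first_logger.setLevel(logging.INFO)
second_logger.setLevel(logging.INFO)

# Add handlers
first_handler = logging.FileHandler('first.log')
second_handler = logging.FileHandler('second.log')
first_logger.addHandler(first_handler)
second_logger.addHandler(second_handler)

# Log messages to the different loggers (and log files)
first_logger.info("Application started")
second_logger.error("An error occurred")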

With this extra configuration, you can set up a robust and scalable logging system that caters to the specific needs of your Python application.

Customizing the log message format

You can log more information about the events happening in your Python application by using the format parameter in the basicConfig function. The format parameter allows you to customize your log message format and include more information if required, with the help of a huge list of attributes. For example, you can include a timestamp in your log message.

To do so, update the main.py file by replacing its content with the following code:

import logging

# 1

logging.basicConfig(level=logging.INFO, format='%(asctime)s - %(levelname)s - %(name)s - %(message)s')

logging.info('This is a custom format log')

In the above code, keep in mind that the format parameter takes a string in which you can use LogRecord attributes in any arrangement you like. The %(asctime)s attribute adds the current timestamp to the log message.

Run the above code by executing the following command in the terminal:

python main.py

In the terminal window, you'll see the log message in the custom format, prefixed with the timestamp.

Exploring some other LogRecord attributes

The format parameter supports various LogRecord attributes, enabling you to include critical details in your log messages. Here are some commonly used attributes:

- %(asctime)s: Adds the timestamp of when the log record was created.
- %(levelname)s: Adds the severity level (DEBUG, INFO, etc.) of the log.
- %(name)s: Adds the name of the logger used, which is particularly helpful if you're logging to multiple files.
- %(funcName)s: Adds the name of the function that was executing when the log was made.
- %(module)s: Adds the name of the module where the logging call was made.
- %(lineno)d: Adds the line number in the source code where the logging call was made.

You can mix and match these attributes to create a format that best suits your application's needs.
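For instance, here is a small sketch that combines a few of these attributes. The logger name `attributes.demo` and the function `create_todo` are invented for the example, and the output is captured in memory instead of being written to the console.

```python
import io
import logging

stream = io.StringIO()
handler = logging.StreamHandler(stream)
handler.setFormatter(
    logging.Formatter("%(levelname)s | %(name)s | %(funcName)s | %(message)s")
)

logger = logging.getLogger("attributes.demo")  # hypothetical logger name
logger.setLevel(logging.DEBUG)
logger.propagate = False
logger.addHandler(handler)

def create_todo(title):
    # funcName resolves to "create_todo" because the logging call happens here.
    logger.debug("Creating to-do %r", title)

create_todo("write docs")
formatted = stream.getvalue().strip()
print(formatted)
# DEBUG | attributes.demo | create_todo | Creating to-do 'write docs'
```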

A detailed Python logging example

Now that you have an idea of how logging works in Python, let's extend the examples to an actual Python program and see how logging fits in. In this example, you'll see how to use the logging.error function in the try-except block.

Example 1 - Using logging.error in a try-except

In the main.py file, replace the existing code with the following:

import logging

logging.basicConfig(
    format='%(asctime)s - %(levelname)s - %(name)s - %(message)s')

# 1
a = 10
b = 'hello world'

# 2
try:
    c = a / b
# 3
except Exception as e:
    logging.error(e)

In the above code, you are performing the following:

- You are declaring two variables, an int a and a string b.
- In the try block of the try-except statement, you are performing a division operation between an int (a) and a string (b). As you probably already know, this is not a valid operation and will cause an exception.
- In the except block, you are catching the exception (e) and logging it using the logging.error function.

Run the above code by executing the following command in the terminal:

python main.py

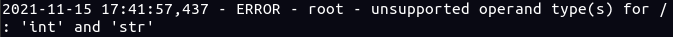

In the terminal window, you'll see a custom-formatted log message stating the unsupported operation error:

2025-01-10 03:47:44,070 - ERROR - root - unsupported operand type(s) for /: 'int' and 'str'
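The message above records what went wrong but not where. If you also want the traceback in the log, the logging module offers the exception method, which logs at the ERROR level and appends the current traceback. The sketch below is a variation on the example, with an arbitrary logger name and an in-memory stream standing in for the console.

```python
import io
import logging

stream = io.StringIO()
handler = logging.StreamHandler(stream)
handler.setFormatter(logging.Formatter("%(levelname)s - %(message)s"))

logger = logging.getLogger("traceback.demo")  # hypothetical logger name
logger.setLevel(logging.DEBUG)
logger.propagate = False
logger.addHandler(handler)

a = 10
b = "hello world"

try:
    c = a / b
except TypeError:
    # logger.exception logs at ERROR level and appends the current traceback.
    logger.exception("Division failed for a=%r, b=%r", a, b)

captured = stream.getvalue()
print(captured)
```

It should only be called from inside an except block, since that is where a traceback is available.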

Example 2 - Logging with multiple handlers

For this example, modify your program to log messages to both the console and a file:

import logging

console_handler = logging.StreamHandler()
file_handler = logging.FileHandler('combined.log')

logging.basicConfig(
    level=logging.INFO,
    format='%(asctime)s - %(levelname)s - %(message)s',
    handlers=[console_handler, file_handler]
)

logging.info("This message goes to both console and file.")
logging.error("This is an error message logged to both.")

This setup demonstrates how to send logs to multiple destinations simultaneously. It also shows you the more advanced formatting discussed in the previous section. Executing this example will show you something like:

2025-01-10 03:46:36,930 - INFO - This message goes to both console and file.

2025-01-10 03:46:36,931 - ERROR - This is an error message logged to both.
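Handlers can also have their own thresholds, so each destination receives a different subset of the messages. The following sketch assumes a quiet console that only shows errors and a file that keeps everything. Two in-memory streams stand in for the console and for combined.log, and the logger name is arbitrary.

```python
import io
import logging

console_stream = io.StringIO()  # stands in for the console
file_stream = io.StringIO()     # stands in for combined.log

console_handler = logging.StreamHandler(console_stream)
console_handler.setLevel(logging.ERROR)  # only ERROR and above

file_handler = logging.StreamHandler(file_stream)
file_handler.setLevel(logging.DEBUG)     # everything the logger passes on

logger = logging.getLogger("handlers.demo")  # hypothetical logger name
logger.setLevel(logging.DEBUG)
logger.propagate = False
logger.addHandler(console_handler)
logger.addHandler(file_handler)

logger.info("Routine event")
logger.error("Something failed")

console_lines = console_stream.getvalue().splitlines()
file_lines = file_stream.getvalue().splitlines()
print(console_lines)  # ['Something failed']
print(file_lines)     # ['Routine event', 'Something failed']
```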

Example 3 - Adding additional context to logs

You can include additional context in your log messages by customizing the log format so that you don't have to manually include that information when you call a log method. Check out this example:

import logging

logging.basicConfig(
    format='%(asctime)s - %(levelname)s - %(name)s - %(module)s:%(lineno)d - %(message)s',
    level=logging.DEBUG
)

def divide(a, b):
    try:
        return a / b
    except Exception as e:
        logging.error("Error dividing %d by %d: %s", a, b, e)
        raise

logging.info("Starting division function")
divide(10, 0)

The output includes the module and line number, making it easier to pinpoint the source of the log. Executing this code will show you something like:

2025-01-10 03:44:22,316 - INFO - root - main:15 - Starting division function
2025-01-10 03:44:22,379 - ERROR - root - main:12 - Error dividing 10 by 0: division by zero
Traceback (most recent call last):
  File "main.py", line 16, in <module>
    divide(10, 0)
  File "main.py", line 10, in divide
    return a / b
ZeroDivisionError: division by zero
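The built-in attributes cover where a log came from, but application-specific context such as the current user has to be supplied by you. One way to do that, sketched below, is logging.LoggerAdapter, which attaches the same extra fields to every record. The `user_id` field, its value, and the logger name are assumptions made for this example.

```python
import io
import logging

stream = io.StringIO()
handler = logging.StreamHandler(stream)
# user_id is not a built-in LogRecord attribute; the adapter below supplies it.
handler.setFormatter(
    logging.Formatter("%(levelname)s - user=%(user_id)s - %(message)s")
)

base_logger = logging.getLogger("context.demo")  # hypothetical logger name
base_logger.setLevel(logging.INFO)
base_logger.propagate = False
base_logger.addHandler(handler)

# Every record logged through the adapter carries user_id=42.
logger = logging.LoggerAdapter(base_logger, {"user_id": 42})
logger.info("To-do created")
logger.warning("To-do list is nearly full")

context_lines = stream.getvalue().splitlines()
print(context_lines)
```

Because the format string references user_id, every record sent to this handler needs that field, which is why the messages go through the adapter and not through the base logger directly.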

Logging in Django

Besides Python's built-in logging library, there are also third-party logging libraries that you can use in Python applications. If you're using a Python library or framework like Django or Flask, you'll be particularly interested in this section.

Django is one of the most popular Python-based web application development frameworks. Internally, Django uses the Python logging module but allows the developer to customize the logging module settings by configuring the LOGGING config variable in the settings.py file.

A simple Django logging configuration

For example, the following configuration writes all log output to the debug.log file at the root of the application:

LOGGING = {
    'version': 1,
    'handlers': {
        'file': {
            'level': 'DEBUG',
            'class': 'logging.FileHandler',
            'filename': 'debug.log'
        }
    },
    'loggers': {
        'django': {
            'handlers': [
                'file'
            ],
            'level': 'DEBUG'
        }
    }
}

This configuration captures the logs emitted under Django's django logger, which covers the development server, web requests, and database queries. The version key specifies the version of the configuration schema. The handlers section defines where and how log messages should be sent, much like configuring handlers in vanilla Python. Meanwhile, the loggers section defines logger behavior (this example just defines the django logger).
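By default, Django hands the LOGGING dictionary to the standard library's logging.config.dictConfig, so the same structure can be tried out without a Django project. The sketch below does that with a temporary file path in place of debug.log. The log message is simulated, and the `disable_existing_loggers` key is an addition for this example.

```python
import logging
import logging.config
import os
import tempfile

# Temporary location standing in for debug.log at the project root.
log_dir = tempfile.mkdtemp()
log_path = os.path.join(log_dir, "debug.log")

LOGGING = {
    "version": 1,
    "disable_existing_loggers": False,
    "handlers": {
        "file": {
            "level": "DEBUG",
            "class": "logging.FileHandler",
            "filename": log_path,
        },
    },
    "loggers": {
        "django": {"handlers": ["file"], "level": "DEBUG"},
    },
}

logging.config.dictConfig(LOGGING)

# Child loggers such as "django.request" propagate up to "django".
logging.getLogger("django.request").warning("Simulated request warning")

with open(log_path) as f:
    django_log = f.read()
print(django_log)
```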

A Django example that uses console logging

If you're doing development, you may want to see logs in a console stream instead of having to tail a log file. Fortunately, you can change your configuration to do this in the same file. Check out this example:

LOGGING = {
    'version': 1,
    'handlers': {
        'console': {
            'level': 'DEBUG',
            'class': 'logging.StreamHandler',
        },
    },
    'loggers': {
        'django': {
            'handlers': ['console'],
            'level': 'DEBUG',
            'propagate': True,
        },
    },
}

A Django example that rotates the log file

In production, you'll almost always want to rotate your log file to prevent it from becoming so large that it consumes all the available space. Django lets you do this with Python's RotatingFileHandler, as in this example:

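LOGGING = {
    'version': 1,
    'formatters': {
        # Defines the 'verbose' formatter referenced by the handler below
        'verbose': {
            'format': '%(asctime)s - %(levelname)s - %(name)s - %(message)s',
        },
    },
    'handlers': {
        'rotating_file': {
            'level': 'INFO',
            'class': 'logging.handlers.RotatingFileHandler',
            'filename': 'app.log',
            'maxBytes': 1024*1024*5, # 5MB
            'backupCount': 5,
            'formatter': 'verbose',
        },
    },
    'loggers': {
        'django': {
            # Must name a handler defined in the handlers section above
            'handlers': ['rotating_file'],
            'level': 'DEBUG',
            'propagate': True,
        },
    },
}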

It's pretty nice to be able to manage all of that log configuration in one place - another win for frameworks! For more information on configuring logging in Django, check out Django’s documentation.
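Rotation itself comes from the standard library, so it can be observed outside Django as well. The sketch below uses deliberately tiny limits (200 bytes, two backups) and a temporary directory so that the rollover shows up after a few dozen messages. Both values and the logger name are chosen only for the demonstration.

```python
import glob
import logging
import logging.handlers
import os
import tempfile

log_dir = tempfile.mkdtemp()
log_path = os.path.join(log_dir, "app.log")

# Tiny limits chosen only so the rollover is visible in a short run.
handler = logging.handlers.RotatingFileHandler(
    log_path, maxBytes=200, backupCount=2
)
handler.setFormatter(logging.Formatter("%(levelname)s %(message)s"))

logger = logging.getLogger("rotation.demo")  # hypothetical logger name
logger.setLevel(logging.INFO)
logger.propagate = False
logger.addHandler(handler)

for i in range(50):
    logger.info("Processing to-do number %d", i)

handler.close()
log_files = sorted(os.path.basename(p) for p in glob.glob(log_path + "*"))
print(log_files)  # ['app.log', 'app.log.1', 'app.log.2']
```

The current file is always app.log. Older content moves to app.log.1, then app.log.2, and anything beyond backupCount is discarded.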

Logging in Flask

Flask, like Django, is a Python-based web application development framework, but it is smaller in size than Django. It also uses the built-in Python logging module, so its logging is just as configurable as Django's, and it exposes an app.logger object (a standard Logger) for writing log messages.

A basic Flask logging configuration

For example, to send logs to the console in the JSON format, you can use the following configuration in a Flask app before initializing the app:

from logging.config import dictConfig

from flask import Flask

# The 'json' formatter requires the python-json-logger package
dictConfig({
    'version': 1,
    'formatters': {
        'json': {
            '()': 'pythonjsonlogger.jsonlogger.JsonFormatter'
        }
    },
    'handlers': {
        'console': {
            'level': 'DEBUG',
            'class': 'logging.StreamHandler',
            'formatter': 'json'
        },
    },
    'root': {
        'level': 'INFO',
        'handlers': ['console']
    }
})

app = Flask(__name__)

For more information regarding logging in Flask, you can check out Flask’s logging documentation.

Storing logs in JSON Format

Instead of logging events in a plain text format, you might want to output them as JSON objects. With the JSON format, you can easily add records to a database or query them with JSON-aware tools, and you can parse them more reliably than free-form text. Many developers find that even basic structuring like this pays huge dividends when looking through logs. To accomplish this, you can use the python-json-logger library to store logs in JSON format.

To install this package in your Python application, run the following command in the terminal:

pip install python-json-logger

The basic code to start using this library is as follows:

import logging

import json_log_formatter

formatter = json_log_formatter.JSONFormatter()

json_handler = logging.FileHandler(filename='log.json')

json_handler.setFormatter(formatter)

logger = logging.getLogger('my_json')

logger.addHandler(json_handler)

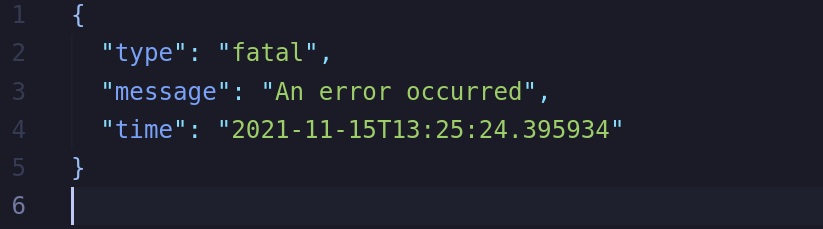

logger.error('An error occurred', extra={'type':'fatal'})

This package creates JSON logs, as shown in the following image:
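If adding a dependency is not an option, a similar effect can be approximated with the standard library alone. The following is a minimal sketch, not how python-json-logger is implemented. The class `SimpleJsonFormatter`, the logger name, and the chosen field names are all made up for illustration.

```python
import io
import json
import logging

class SimpleJsonFormatter(logging.Formatter):
    """Hypothetical minimal formatter: one JSON object per log record."""

    def format(self, record):
        payload = {
            "time": self.formatTime(record),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Include a custom field passed via extra={"type": ...}, if present.
        if hasattr(record, "type"):
            payload["type"] = record.type
        return json.dumps(payload)

stream = io.StringIO()  # stands in for log.json
handler = logging.StreamHandler(stream)
handler.setFormatter(SimpleJsonFormatter())

logger = logging.getLogger("my_json_sketch")  # hypothetical logger name
logger.setLevel(logging.INFO)
logger.propagate = False
logger.addHandler(handler)

logger.error("An error occurred", extra={"type": "fatal"})

parsed = json.loads(stream.getvalue())
print(parsed["level"], parsed["message"], parsed["type"])
```

A dedicated library handles many more cases, such as exception info and arbitrary extra fields, so this approach fits best when the set of fields is small and fixed.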

Adding Python logging to your own applications

It is very important to monitor events occurring in an application, and one of the most recommended ways is to use logging. Without keeping track of what's happening in your application and how the users are utilizing it, you will never be able to identify performance bottlenecks. There is a saying that you can only improve what you can measure. If you are unable to improve your system, you will lose customers over time. Therefore, it is recommended to use logging in your applications. Python logging is approachable and useful, so why not give it a shot today?

If you are looking for a robust, cloud-based system for real-time monitoring, error tracking, and exception-catching, you might love Honeybadger. You can use it with any framework or language, including Python, Ruby, JavaScript, and PHP.